i7242 | Xingyu Yan: eat a big bowl at every meal, sleep a whole day at every nap

Simple PLA

About PLA

This is only a simple, naive PLA (Perceptron Learning Algorithm) in 2D, built for visualization. It doesn't consider noise or use any training/validation techniques.

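It follows the fundamentals of PLA. Assume each 2D point is augmented with a constant 1 for the bias term, so we have $\mathbf{x} = (1, x_1, x_2)^T$ and $\mathbf{w} = (w_0, w_1, w_2)^T$. The line $\mathbf{w}^T\mathbf{x} = 0$ separates the points into two categories.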
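As a sketch of this augmented form, assuming the constant 1 is stored as the first coordinate (the implementation only shows that each point is a 3-vector, one per column of `points`), raw 2D coordinates can be augmented by prepending a row of ones. The line x1 + x2 = 1 and the names `xy`, `w`, `on_line` are hypothetical examples: that line corresponds to weights (-1, 1, 1), and a point lying on it scores exactly zero.

```julia
# Raw 2D coordinates, one hypothetical point per column
xy = [0.0 1.0 2.0 0.25;
      0.0 1.0 0.5 0.25]

# Prepend a row of ones so each column becomes x = (1, x1, x2)
points = vcat(ones(1, size(xy, 2)), xy)

# Hypothetical line x1 + x2 = 1, written as wᵀx = 0 with w = (-1, 1, 1)
w = [-1.0, 1.0, 1.0]

on_line = [1.0, 0.5, 0.5]   # (x1, x2) = (0.5, 0.5) lies on the line
w' * on_line                # 0.0
```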

Given $\mathbf{w}$, we know it classifies a point by:

$$h(\mathbf{x}) = \operatorname{sign}(\mathbf{w}^T\mathbf{x})$$
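The rule can be tried on single points with a small helper; the name `h` and the weights (the hypothetical line x1 + x2 = 1, with the constant 1 assumed to be the first coordinate) are illustrative. Note that Julia's `sign` returns 0.0 for a point exactly on the line, which matches neither label.

```julia
# h(x) = sign(wᵀx) for a single augmented point x = (1, x1, x2)
h(x, w) = sign(w' * x)

w = [-1.0, 1.0, 1.0]        # hypothetical line x1 + x2 = 1

h([1.0, 2.0, 0.5], w)       # wᵀx = 1.5  → 1.0
h([1.0, 0.25, 0.25], w)     # wᵀx = -0.5 → -1.0
h([1.0, 0.5, 0.5], w)       # on the line → 0.0
```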

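Given its label $y \in \{-1, +1\}$, if its classification is wrong, then:

$$\operatorname{sign}(\mathbf{w}^T\mathbf{x}) \neq y \quad\Longleftrightarrow\quad y\,\mathbf{w}^T\mathbf{x} \le 0$$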
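The same test in code is a one-line check on the sign of y·wᵀx. The helper name `misclassified` and the example weights are hypothetical; using `<= 0` rather than `< 0` also flags points sitting exactly on the line, where `sign` gives 0.

```julia
# Hypothetical helper: true when label y (±1) disagrees with sign(wᵀx);
# `<= 0` also flags points lying exactly on the line
misclassified(x, y, w) = y * (w' * x) <= 0

w = [-1.0, 1.0, 1.0]            # hypothetical line x1 + x2 = 1
x = [1.0, 2.0, 0.5]             # wᵀx = 1.5, so h(x) = +1

misclassified(x, 1.0, w)        # false
misclassified(x, -1.0, w)       # true
```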

Then we can update $\mathbf{w}$ (and hence the line) by:

$$\mathbf{w} \leftarrow \mathbf{w} + y\,\mathbf{x}$$
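To see why this update moves the line in the right direction, apply the updated weights to the same misclassified point. Since $y^2 = 1$:

$$
y\,(\mathbf{w} + y\,\mathbf{x})^T\mathbf{x} = y\,\mathbf{w}^T\mathbf{x} + y^2\,\mathbf{x}^T\mathbf{x} = y\,\mathbf{w}^T\mathbf{x} + \lVert\mathbf{x}\rVert^2
$$

So each update raises $y\,\mathbf{w}^T\mathbf{x}$ for that point by $\lVert\mathbf{x}\rVert^2$, which is strictly positive for any nonzero $\mathbf{x}$. One update may not be enough to put the point on the correct side, and updates for other points can push it back, which is why training makes repeated passes over the data.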

Implementation

The core of PLA is as simple as this:

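```julia
# points: 3×N matrix, one augmented 2D point per column
# labels: length-N vector of ±1
function pla_train(points, labels, iter = 500)
    w = rand(3)                       # random initial weights
    for i in 1:iter                   # fixed number of passes over the data
        for idx in 1:size(points, 2)
            u = points[:, idx]' * w   # score wᵀx
            if u * labels[idx] <= 0   # misclassified (or on the line)
                w += points[:, idx] * labels[idx]   # w ← w + y x
            end
        end
    end
    return w
end

# Classify every column of `points` as sign(wᵀx)
function pla_predict(points, w)
    return sign.(points' * w)
end
```

The rest of the code, including the generation of the train/test data sets, is here.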
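Since that part is not reproduced in this post, the following is a hypothetical stand-in, not the author's generator. It assumes points drawn uniformly from [-1, 1]², the constant 1 in the first row, and a hidden line `w_true`; points whose score falls below `margin` are rejected so the two classes are linearly separable with a gap. The function name, defaults, and seeds are all illustrative.

```julia
using Random

# Hypothetical generator: n points as columns (1, x1, x2), labelled by the side
# of the hidden line w_true, keeping only points with |w_trueᵀx| >= margin
function make_data(n; w_true = [0.1, -1.0, 1.0], margin = 0.05,
                   rng = MersenneTwister(1234))
    points = zeros(3, n)
    filled = 0
    while filled < n
        x = vcat(1.0, 2 .* rand(rng, 2) .- 1)   # (1, x1, x2) with x1, x2 in [-1, 1)
        if abs(w_true' * x) >= margin           # reject points too close to the line
            filled += 1
            points[:, filled] = x
        end
    end
    labels = sign.(points' * w_true)            # ±1; never 0 thanks to the margin
    return points, labels
end

points_train, labels_train = make_data(100)
points_test, labels_test = make_data(50; rng = MersenneTwister(5678))
```

With data shaped like this, training and testing would be `w = pla_train(points_train, labels_train)` followed by `result_test = pla_predict(points_test, w)`. The margin is a design choice that keeps the classes cleanly separated, so PLA tends to converge quickly.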

Test & Results

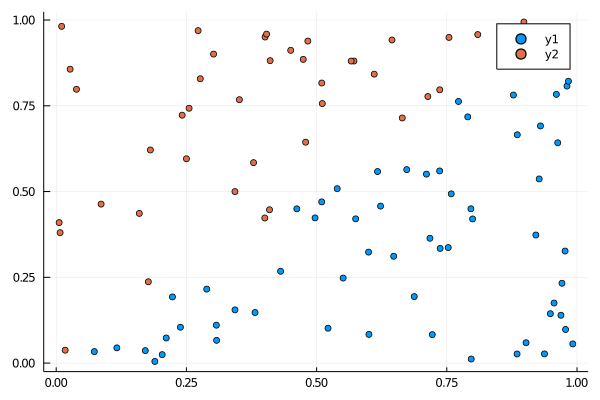

The red/blue colours show the ground-truth labels of the points.

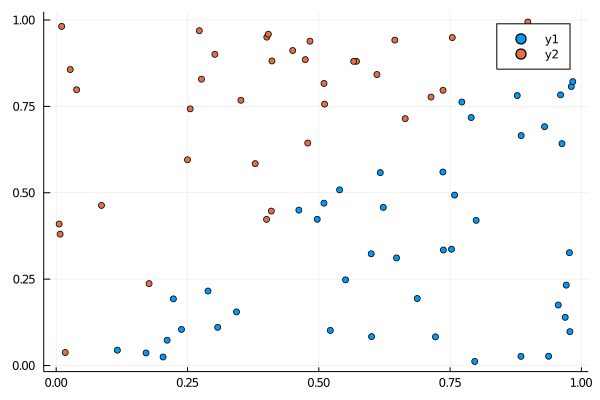

After training, the training data set is classified as:

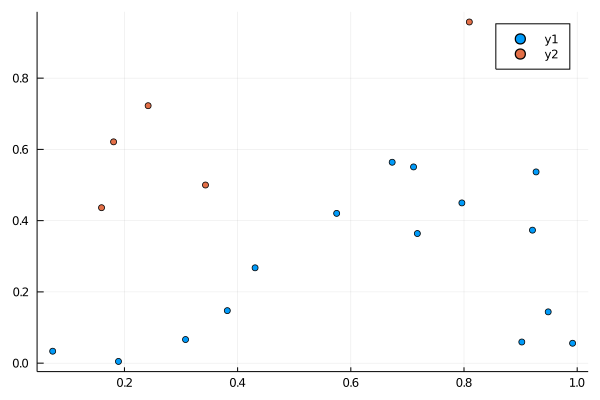

On the testing data set, we got:

Counting the misclassifications shows there were none:

```julia
sum(result_test .!= labels_test)
```

This is because the generated data is linearly separable and has no noise. It's too simple 😹😹.
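Because the data is linearly separable, a fixed 500 passes is more than PLA needs: by the perceptron convergence theorem it reaches a pass with no updates after finitely many mistakes. The sketch below is a variant, not the author's code: it starts from zero weights, stops at the first mistake-free pass, and reports how many passes that took. The function name, the `max_epochs` cap, and the tiny data set (labelled by the hypothetical line x1 + x2 = 1, constant 1 in the first row) are illustrative.

```julia
# Variant of the training loop: stop after the first pass with no updates.
# `max_epochs` is only a safety cap for data that is not separable.
function pla_train_until_clean(points, labels; max_epochs = 1000)
    w = zeros(size(points, 1))
    for epoch in 1:max_epochs
        mistakes = 0
        for idx in 1:size(points, 2)
            x = points[:, idx]
            if labels[idx] * (w' * x) <= 0   # wrong side of (or on) the line
                w += labels[idx] * x         # w ← w + y x
                mistakes += 1
            end
        end
        if mistakes == 0
            return w, epoch                  # converged: every point correct
        end
    end
    return w, max_epochs                     # gave up without a clean pass
end

# Tiny separable set: columns are (1, x1, x2), labelled by the line x1 + x2 = 1
points = [1.0 1.0 1.0 1.0;
          0.0 1.0 2.0 0.25;
          0.0 1.0 0.5 0.25]
labels = [-1.0, 1.0, 1.0, -1.0]

w, epochs = pla_train_until_clean(points, labels)
epochs                                # 2
sum(sign.(points' * w) .!= labels)    # 0
```

Here the first pass makes three updates and the second pass makes none, so training stops after two passes instead of 500.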